Googlebot Optimization for Better SEO | Think beyond SEO

Howdy readers! Have you heard about Googlebot optimization? You already know about search engine optimization, whether it is on-page SEO, off-page SEO or image SEO. We have also talked about WordPress website clean-up and optimization for better SEO practices. So now let’s see how you can optimize your site for Googlebot.

Googlebot optimization is not the same as WordPress optimization or search engine optimization. Here the main focus is on how Google’s crawler accesses your site. A site’s crawlability is the important first step to ensuring its searchability.

Here we will focus on the best practices that matter for crawlability. So, let’s start with the question: what is Googlebot?

What is a Googlebot?

Googlebot is Google’s search engine bot, which crawls a website and helps in indexing its pages. It is also called a crawler or web spider. Googlebot crawls only those links that it is allowed to access through the robots.txt file. Once it has crawled a link, the page can be indexed and then returned for relevant search queries.

Types of Googlebot –

- Googlebot-Mobile

- Mediapartners-google

- Googlebot-Image

- Googlebot-Video

- Adsbot-Google

- Googlebot-News

- Google-Mobile-Apps

I have covered how they work and their special purposes in a separate article. I have also mentioned how

Read Recommended – What is a Googlebot? Googlebot SEO benefits, working principle & types

Googlebot optimization basic principles

Understanding how Googlebot crawls and indexes your site is crucial to Googlebot optimization. Here are some basic principles that will help you understand how Googlebot works.

- Googlebot crawl budget for your site – The amount of time that Googlebot spends crawling your site is called the “crawl budget.” The greater a page’s authority, the more crawl budget it receives. So, sites with high domain authority and page authority will receive more crawl budget than a new site.

- Googlebot is always crawling your website – “Googlebot shouldn’t access your site more than once every few seconds on average.” Google says this in its Googlebot article. In other words, your site is always being crawled, provided it accepts Google’s web spiders correctly. It’s important to note that not every page on your site is crawled by Googlebot all the time. This is where consistent content marketing comes in: newly written, detailed, properly structured, and updated content always gains the crawler’s attention and improves the likelihood of top-ranked pages.

- Robots.txt is accessed first by Googlebot to get the crawling rules you have set – Pages, posts, media, and WordPress directories that are disallowed will not be accessed by Googlebot. If you don’t have a robots.txt file, create one and write your crawling rules there.

- The sitemap of your website is accessed by Googlebot to discover new content, updated content and other areas of your site to be indexed – Every website, WordPress or otherwise, is built differently, and there is a lot of variation in site structure. So, Googlebot may not crawl every page or section on its own. The sitemap is what tells Google about your site’s data. Sitemaps are also useful for telling Google about the metadata behind categories like video, images, mobile, and news. You can easily create a sitemap using the Yoast SEO or All In One SEO plugins. Once it is created, submit your sitemap to Google Search Console for proper indexing of links.
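To make the sitemap idea concrete, here is a minimal sketch of what such a file contains, built with Python's standard library. The function name `build_sitemap` and the example.com URLs are assumptions made for illustration; a plugin like Yoast SEO generates the equivalent file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML for a list of (url, last_modified_date) pairs.

    `entries` is a hypothetical input shape chosen for this sketch.
    """
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        node = ET.SubElement(root, "url")
        ET.SubElement(node, "loc").text = url
        ET.SubElement(node, "lastmod").text = lastmod  # W3C date, e.g. 2020-03-10
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

sitemap_xml = build_sitemap([
    ("https://example.com/", "2020-03-10"),
    ("https://example.com/googlebot-optimization/", "2020-03-12"),
])
print(sitemap_xml)
```

Save the output as sitemap.xml at the root of the site and submit its URL in Search Console.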

Read Also – How to create an XML sitemap for non-WordPress Website?

6 principles you should follow for Googlebot optimization

Googlebot optimization comes one step before search engine optimization. It’s important that your site is accurately crawled and indexed by Google. So, let’s see which principles you need to keep in mind.

1. Optimize your robots.txt file properly

Every time Googlebot or another search engine bot comes to crawl your site, it goes to the robots.txt file first. The robots.txt file tells it where to go and where not to go. So, robots.txt is essential for SEO. I have already discussed crawl budget above. It’s something which is dependent on

So, you should always optimize your robots.txt. This will direct enough crawl budget to the right sections of your website. You don’t want to spend this limited resource on scanning the junk pages of your website.
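As a rough illustration, the sketch below checks a set of rules before they go live, using Python's standard `urllib.robotparser`. The rules and example.com URLs are made-up assumptions, not a recommended configuration. Note that Python's parser applies the first matching rule, while Google uses the most specific one, so the `Allow` line is placed first here to get the same answer from both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for a WordPress-style site (assumption for this sketch).
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /tag/
"""

def crawlable(robots_txt, urls, agent="Googlebot"):
    """Map each URL to True if `agent` may crawl it under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}

report = crawlable(ROBOTS_TXT, [
    "https://example.com/googlebot-optimization/",
    "https://example.com/tag/seo/",
    "https://example.com/wp-admin/options.php",
    "https://example.com/wp-admin/admin-ajax.php",
])
for url, allowed in report.items():
    print("allowed" if allowed else "blocked", url)
```

If a page you want ranked shows up as blocked, the rule that matches it is the one to loosen.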

2. Create fresh and original contents

It is crucial for Googlebot optimization that your low ranked pages should be

For example – Initially the content was “Googlebot optimization and its benefits for SEO”. Now you changed it to “Optimizing Googlebot for better SEO practices”. This type of change is good. You can do it.

See what Moz says about freshness of contents and its impact on Google ranking.

In a similar fashion, originality of content also matters to Google. Google does not give much importance to content that is copied or stale. I would recommend you always go with unique and detailed content.

3. Internal linking can’t be ignored

Whenever I have talked about on-page SEO, I have always urged people to interlink relevant articles. For example, in this article I have interlinked my SEO, backlinks and previous Googlebot articles along with some other articles.

The more tight-knit and integrated your internal linking structure is, the better it will be crawled by Google. You can use Yoast SEO, which analyzes your content and suggests articles that can be interlinked.

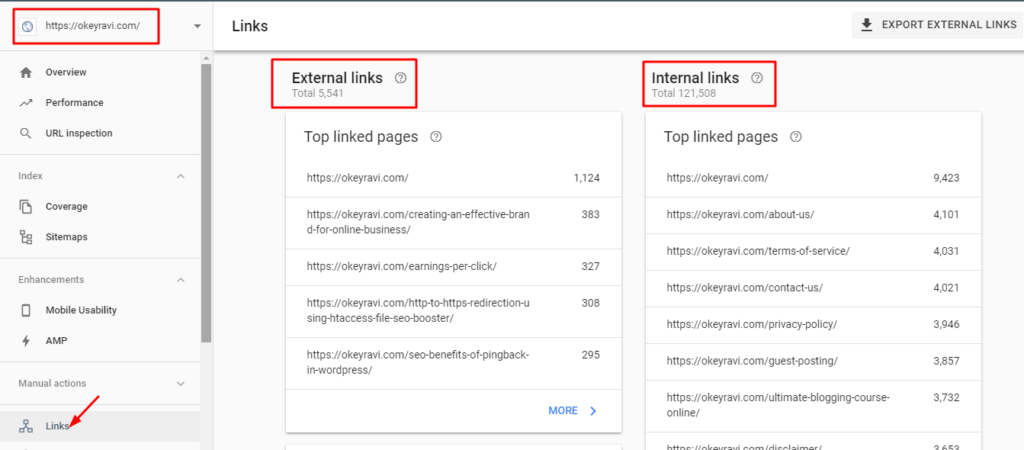

If you want to analyze your interlinking structure, visit Google Search Console → Links → Internal Links. If the pages at the top of the list are strong content pages that you want to be returned in the SERPs, then you’re doing well.
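If you want a rough offline version of that report, the sketch below counts how many pages link to each internal URL. It assumes you already have the HTML of your pages in a dictionary keyed by URL; the function name and the sample pages are hypothetical, and Search Console's own counts may differ.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a document."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def count_inbound_internal_links(pages):
    """Count, for each internal URL, how many distinct pages link to it."""
    inbound = Counter()
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        host = urlparse(page_url).netloc
        targets = set()
        for href in collector.hrefs:
            # Resolve relative links and drop #fragments.
            target = urljoin(page_url, href).split("#")[0]
            if urlparse(target).netloc == host and target != page_url:
                targets.add(target)
        inbound.update(targets)
    return inbound

pages = {
    "https://example.com/": '<a href="/seo/">SEO</a> <a href="/googlebot/">Bot</a> '
                            '<a href="https://other.com/">External</a>',
    "https://example.com/seo/": '<a href="/googlebot/">Bot</a> '
                                '<a href="/googlebot/#types">Types</a>',
    "https://example.com/googlebot/": "<p>No links here</p>",
}
link_counts = count_inbound_internal_links(pages)
for url, count in link_counts.most_common():
    print(count, url)
```

Pages you care about that appear near the bottom of this list, or not at all, are candidates for more internal links.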

4. Create a Sitemap for your website

I have covered the creation and importance of a sitemap in separate articles. See these articles –

- How to create a sitemap for a non-WordPress website?

- How to create and submit a sitemap for a WordPress Website?

5. Don’t get too fancy

Googlebot can struggle with content that depends on JavaScript, DHTML, frames, Flash, and Ajax. Google can render JavaScript, but rendering takes extra time and resources, and Flash content is no longer indexed. So, relying heavily on these types of content is not advisable. If you use WordPress, you can minify JavaScript and CSS files using the WP Rocket plugin.

These things also increase your page load time, so reduce them as much as possible.

You can read this Google article on how Google finds your pages and how it understands them.

It is possible that in future

6. Infinite scrolling pages optimization

I have seen some bloggers use infinite scrolling pages on their sites. For better Googlebot optimization, you need to ensure that your infinite scrolling pages comply with the guidelines provided by Google, which are also explained in Neil Patel’s article.

How to analyze Googlebot’s performance on your site?

Analyzing Googlebot’s performance is a crucial step in Googlebot optimization, and it is quite simple with the help of Google Search Console (formerly Google Webmaster Tools).

The data provided by Search Console is limited, but it will help you find any serious errors or major crawl issues on your blog.

Let’s see what you have to keep in mind when you look at Googlebot’s performance. Log in to Google Search Console and navigate to Coverage.

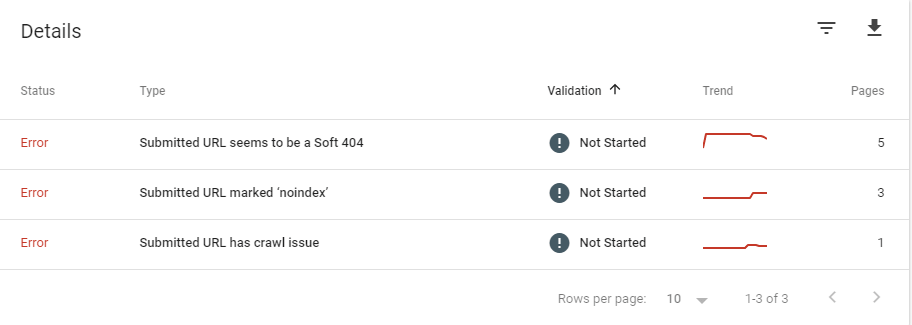

Resolve the crawl errors

Checking and fixing crawl errors is the first step of Googlebot optimization. In Coverage, you can check whether you have any crawl errors. If not, that’s awesome. But if you do have crawl errors, you need to fix them immediately.

As you can see, there are 9 coverage errors on my website, and a single crawl issue among them. Here is the list of errors –

- Submitted URL seems to be a Soft 404

- URL submitted marked ‘noindex’

- Submitted URL has crawl issue

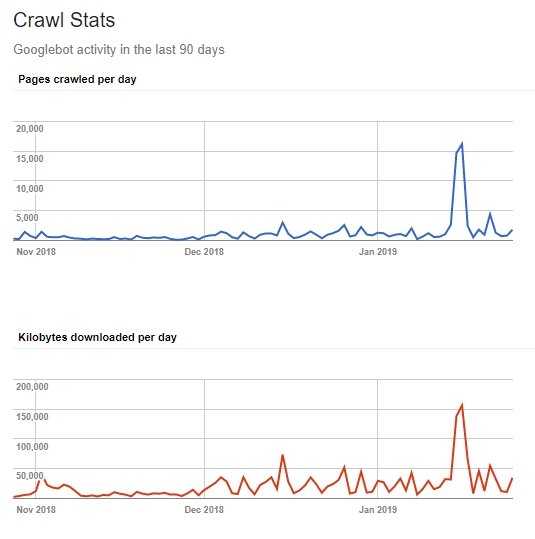

Keep an eye on your crawl stats

Google tells you about pages crawled per day, kilobytes downloaded per day and time spent downloading a page (in milliseconds). A proactive content marketing campaign that regularly pushes fresh content will provide positive upward momentum for these stats.
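You can approximate the first two of these numbers from your own server logs. The sketch below assumes access logs in the common “combined” format and simply matches the text “Googlebot” in the user-agent field; the function name and sample lines are made up for illustration. The user agent can be spoofed, so treat the result as an estimate.

```python
import re
from collections import defaultdict

# Combined log format: date, request, status, bytes, referrer, user agent.
LOG_PATTERN = re.compile(
    r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\] "[^"]*" \d{3} (\d+|-) "[^"]*" "([^"]*)"'
)

def googlebot_crawl_stats(log_lines):
    """Return {date: {"pages": n, "kilobytes": kb}} for Googlebot requests."""
    stats = defaultdict(lambda: {"pages": 0, "kilobytes": 0.0})
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        date, size, user_agent = match.groups()
        if "Googlebot" not in user_agent:
            continue
        stats[date]["pages"] += 1
        if size != "-":
            stats[date]["kilobytes"] += int(size) / 1024
    return dict(stats)

sample_log = [
    '66.249.66.1 - - [10/Mar/2020:06:25:14 +0000] "GET /seo/ HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2020:06:25:20 +0000] "GET /robots.txt HTTP/1.1" 200 1024 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Mar/2020:06:26:01 +0000] "GET / HTTP/1.1" 200 2048 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
crawl_stats = googlebot_crawl_stats(sample_log)
print(crawl_stats)
```

Running this over several weeks of logs gives a trend you can compare against the crawl stats shown by Google.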

Take help from “fetch as Google” feature

The “Fetch as Google” feature allows you to look at your site or individual web pages the way that Google would. You can use it to fetch any page that has crawl errors and, once the fetch completes, request indexing from Google. It also helps with other errors such as soft 404, clickable elements too close together, content out of page and more. (In the current Search Console, this role is filled by the URL Inspection tool.)

Read also – Fast Google Indexing | Indexing errors on Google

Check status of blocked URLs

If you want to check whether a URL is blocked for Googlebot, you can use the robots.txt tester. You can test it against the different Googlebots.

If a legitimate link is blocked then you should make changes accordingly in your robots.txt file to solve that error.

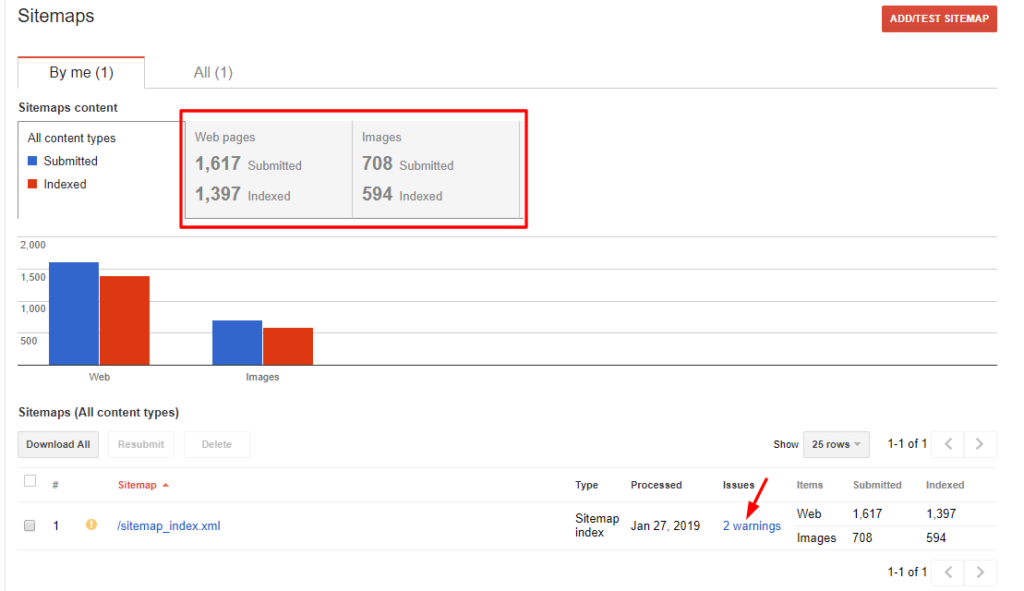

Keep your sitemap updated

You should check your sitemap status from time to time to see how many links have been discovered, how many images have been discovered, and how many of them have been indexed so far.

URL parameters configuration

If you want to know how URL parameter configuration works, you should read this Google article. It mostly applies when you have duplicate content on your site.

Navigate to URL Parameters in the Search Console crawl report and see whether you are getting this message: “Currently Googlebot isn’t experiencing problems with coverage of your site, so you don’t need to configure URL parameters. (Incorrectly configuring parameters can result in pages from your site being dropped from our index, so we don’t recommend you use this tool unless necessary.)” If so, well and good. You don’t need to configure your URL parameters.
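To see whether parameters are creating duplicate URLs on your own site, a small script can group URLs that differ only by parameters that do not change the content. This is a sketch under assumptions: the list of ignored parameters and the function names are hypothetical, and you would need to adjust them to the parameters your site actually uses.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed not to change page content (assumption for this sketch).
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def canonicalize(url):
    """Drop ignored parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def find_duplicates(urls):
    """Return {canonical_url: [original urls]} for groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonicalize(url)].append(url)
    return {key: group for key, group in groups.items() if len(group) > 1}

duplicates = find_duplicates([
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?utm_source=newsletter&color=red",
    "https://example.com/shoes?color=blue",
])
print(duplicates)
```

Groups reported here are the pages where a canonical tag or a parameter rule is worth considering.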

Conclusion on Googlebot optimization

We all strive for decent traffic. To get it, we do proper SEO and write detailed content, and that works for us, but not to the extent it should. So, Googlebot optimization is key. Most webmasters forget to optimize for Googlebot, and they are losing a lot of traffic because of it.

So, don’t make this mistake. Optimize your Googlebot performance and see how it works for you.

If you liked this article, then please subscribe to our YouTube Channel. You can also find us on Twitter and join our Digital marketing hacks Facebook group.

If you have any query or suggestion, comment below.

Thanks for reading.

Comments (5)

Rakesh Sain

Hello Ravi sir,

I am new to blogging. My website is apnaresults.com. I found the “Submitted URL seems to be a Soft 404” error on many pages of my website. Please let me know what I can do.

Okey Ravi

Hi Rakesh,

404 means the submitted URLs are no longer valid. Please check that whatever you have submitted is working. Don’t submit every type of link to Google, only the relevant ones.

Semtitans

This is really a great article, this helped me to understand Google search engine bot principles. Thanks for the article.

Vijay Kumar

Hello sir,

Great article once again, Thank you for sharing Googlebot optimization techniques.

Your content is unique compared to other sites. Thank you for sharing your experience with us.

I am glad to read your article

Hope you are enjoying the day

Regards

-vj

Okey Ravi

Thanks, Vijay,

Great to see you again. You should also optimize your Googlebot performance to see the better results in the future.
